Introduction

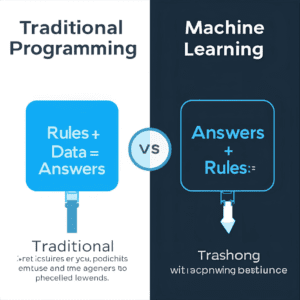

To the average user, Machine Learning (ML) feels like magic. You feed an image of a cat into a computer, and it says “Cat.” You ask it to write a poem, and it rhymes.

But under the hood, there is no magic. There is only math—specifically, statistics and linear algebra interacting with massive datasets.

For developers and tech enthusiasts, understanding these mechanics is no longer optional. Whether you want to build your own models or simply understand the tools you use, you need to know what happens inside the “Black Box.” This guide breaks down the three core paradigms of Machine Learning that power everything from Netflix recommendations to self-driving cars.

1. The Core Concept: It’s All About “Weights”

At its simplest level, a Machine Learning model is a mathematical function. Think back to high school algebra: $y = mx + b$.

-

$x$ is the input (data).

-

$y$ is the output (prediction).

-

$m$ and $b$ are the adjustable parameters.

In a neural network, we have millions (or billions) of these adjustable parameters, which we call Weights.

When a model is “learning,” it isn’t reading a book. It is simply adjusting these weights slightly, over and over again, until its output matches the desired result. It minimizes the “Error” (the difference between its guess and the real answer) using a process called Gradient Descent.

2. Paradigm 1: Supervised Learning (The Teacher)

This is the most common form of ML today. It powers spam filters, face recognition, and medical diagnosis.

-

How it works: The model is given a “Labeled Dataset.” This acts like an answer key. It sees a picture of a dog labeled “Dog” and a picture of a muffin labeled “Muffin.”

-

The Process: It guesses, checks the answer key, and adjusts its weights.

-

Real-World Example: Your email spam filter. You (the user) Mark an email as “Spam.” That is a label. The model learns that “Urgent Money Transfer” + “Unknown Sender” = Spam.

Key Algorithm: Linear Regression, Decision Trees, Support Vector Machines.

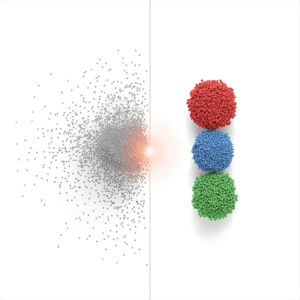

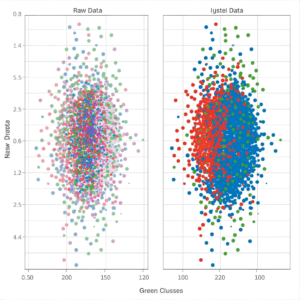

3. Paradigm 2: Unsupervised Learning (The Explorer)

What if we don’t have labels? What if we just dump a massive pile of data on the computer and say, “Find patterns”?

-

How it works: The model looks for hidden structures or similarities in the data without being told what they are.

-

The Process: It groups similar data points together. This is called Clustering.

-

Real-World Example: Customer Segmentation. An e-commerce site analyzes millions of purchases and realizes that “People who buy diapers” also often “buy beer” (a famous real-world correlation). It discovered a group humans didn’t know existed.

Key Algorithm: K-Means Clustering, Principal Component Analysis (PCA).

4. Paradigm 3: Reinforcement Learning (The Gamer)

This is how we teach robots to walk and AI to beat humans at Chess and Go.

-

How it works: The model (Agent) is placed in an environment and given a goal. It gets a “Reward” (+1 point) for doing something right and a “Penalty” (-1 point) for doing something wrong.

-

The Process: It tries random things (Trial and Error). Over millions of attempts, it learns the strategy that maximizes the total reward.

-

Real-World Example: A self-driving car in a simulation. It gets points for staying in the lane and loses points for hitting a cone. Eventually, it learns to drive perfectly without being explicitly programmed with traffic rules.

Key Algorithm: Q-Learning, Deep Q-Networks (DQN).

5. Deep Learning: The Neural Network Revolution

Deep Learning is a specific subset of Machine Learning inspired by the human brain.

Instead of a single mathematical layer, it stacks layers of artificial neurons on top of each other.

-

Layer 1: Detects edges (vertical lines, horizontal lines).

-

Layer 2: Combines edges to detect shapes (circles, squares).

-

Layer 3: Combines shapes to detect features (eyes, wheels).

-

Layer 4: Recognizes the object (Cat, Car).

This “hierarchy” of understanding allows Deep Learning models (like GPT-4) to handle incredibly complex data like language and video.

Conclusion: The Future is Hybrid

We are moving toward systems that combine these methods. For example, Self-Supervised Learning (how LLMs are trained) uses parts of the data to predict other parts, effectively creating its own labels.

For the aspiring Data Scientist, the path is clear: Don’t just learn to import a library like TensorFlow or PyTorch. Learn the math behind the curtain. Understanding why a model fails is infinitely more valuable than knowing how to copy-paste code that makes it run.